Stay Secure. Stay Compliant. Stay Ahead

RLHF (Reinforcement Learning from Human Feedback) + DPO (Direct Preference Optimization)

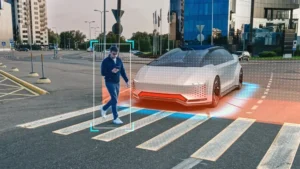

Smart Automation Meets Human Judgement for Safer AI

At Digital Divide Data (DDD), we help organizations create safer and more trustworthy digital experiences. Our Trust & Safety Services focus on protecting users and brands through practical solutions like identity verification, fraud and spam detection, brand safety checks, and content moderation. By combining smart automation with human expertise, we make sure risks are caught early, harmful activity is reduced, and AI systems can be used responsibly and safely.

Our Trust and Safety Services

Our use-case offerings reflect a holistic approach to digital trust and safety.

Scenario Identification & Mining

User Verification & ID Checks

Strengthen trust and security with advanced document verification, fraud flagging, and compliance checks to ensure every user is authentic and meets platform standards.

Spam & Bot Detection

Identify fake accounts, automated bots, and suspicious posting patterns that disrupt user experiences and damage credibility.

Brand Safety & Ad Quality Review

Review ads and placements to guarantee alignment with brand rules, audience expectations, and industry standards.

Fraud Auditing & Verification

Mitigate financial and reputational risks through transaction reviews, account-level analysis, and proactive fraud prevention strategies.

Low-Severity Content Moderation

Filter out profanity, harassment, and straightforward policy violations to keep online spaces safe and respectful.

AI Data Labeling & Annotation for Safety

Bias Detection in Data

Label training datasets to identify and reduce gender, racial, and cultural bias in AI systems.

Toxic Content Tagging

Detect and annotate harmful language, hate speech, or inappropriate content to strengthen AI safety.

Context-Aware Annotation

Apply nuanced labels that help AI distinguish safe from unsafe usage based on context.

Prompt Engineering & Red Teaming

Vulnerability Testing

Design and execute prompts to expose weaknesses in generative AI systems.

Harmful Output Simulation

Stress-test models with adversarial prompts to detect unsafe, biased, or harmful responses.

Red Team Evaluation

Perform systematic red teaming exercises to uncover risks before deployment.

AI Output Validation & Fact-Checking

Validate AI-generated outputs against trusted knowledge sources to reduce factual inaccuracies.

Policy Compliance Checking

Ensure AI outputs meet ethical, legal, and organizational standards.

Human-in-the-Loop Review

Combine automated checks with expert human validation for high-stakes content.

AI Bias & Fairness Audits

Discrimination Detection

Identify bias in model predictions across demographic groups.

Fairness Assurance

Run audits to ensure equitable treatment across use cases and applications.

Ethical AI Review

Evaluate models against fairness and inclusivity benchmarks to promote responsible AI adoption.

Model Safety Evaluation & Auditing

Performance Against Safety Standards

Evaluate AI models against predefined safety benchmarks to ensure reliability.

Risk Assessment Frameworks

Assess potential misuse scenarios and unintended consequences of deployment.

Ongoing Safety Audits

Continuously monitor and audit models post-deployment to detect new risks.

Data Labelled

500M+

Safety Critical Events Identified, Analyzed, and Reported at a Market-Leading P95 Quality Rating

Success Rate

91%

Pilot Projects Converted to a Full-Scale Production Pipeline

Cost Savings

35%

Top-of-the-Line Cost Savings for ML Data Operations Customers

Time to Launch

10 Days

Time to launch a new Data Operations Workstream from the ground up, from concept to delivery

Why Choose DDD?

We are more than a data labeling service. We bring industry-tested SMEs, provide training data strategy, and understand the data security and training requirements needed to deliver better client outcomes.

Our global workforce allows us to deliver high-quality work, 365 days a year, with data labelers across multiple countries and time zones. With 24/7 coverage, we are agile in responding to changing project needs.

We are lifetime project partners. Your assigned team will stay with you – no rotation. And as your team members become experts over time, they train more labelers. That’s how we achieve scale.

We are platform agnostic. We don’t force you to use our tools; we integrate with the technology stack that works best for your project.

What Our Clients Say

Partnering with DDD has strengthened our fraud detection workflows and also helped us establish clear guardrails for safe content moderation. We now feel confident that our platform is secure and trusted by our users.

DDD provided both the expertise and the human touch we needed. Their hybrid model of automation plus human review caught risks we didn’t even know we had.

DDD helped us strengthen identity verification and transaction monitoring. Their approach was practical, scalable, and aligned with regulatory standards. We see them as a long-term partner in protecting our customers.

Working with DDD gave us the confidence to scale globally. Their brand safety and moderation solutions ensured that customer trust remained intact, even as we expanded into new markets. They feel less like a vendor and more like an extension of our team.

Blog

Deep dive into the latest technologies and methodologies that are shaping the future of AI

Building Robust Safety Evaluation Pipelines for GenAI

This blog explores how to build robust safety evaluation pipelines...

Evaluating Gen AI Models for Accuracy, Safety, and Fairness

This blog explores a comprehensive framework for evaluating generative AI...

Helping AI and Digital Platforms Remain Safe, Fair, and Future-Ready

Frequently Asked Questions

Trust & Safety services are designed to protect users, platforms, and brands from fraud, harmful content, abuse, and misuse of digital systems. At DDD, these services combine automation and human expertise to ensure safer online interactions and responsible AI deployment.

We use smart automation to handle scale, speed, and pattern detection, while trained human experts provide contextual understanding, judgment, and validation, especially for nuanced, high-risk, or edge-case scenarios. This hybrid approach delivers higher accuracy and reliability.

Our teams perform document verification, user identity checks, transaction reviews, and fraud auditing to flag suspicious behavior early, reduce financial risk, and help platforms meet regulatory and compliance standards.

Yes. We offer AI bias detection, fairness audits, toxic content tagging, and context-aware data annotation to help organizations identify risks, reduce bias, and improve the safety and inclusivity of AI models.

Prompt engineering and red teaming involve systematically testing AI models with adversarial and edge-case prompts to uncover vulnerabilities, unsafe behaviors, and bias before deployment. This helps organizations proactively manage AI risk.

We validate AI outputs against trusted sources using automated checks and expert human review. This human-in-the-loop approach reduces hallucinations and ensures outputs meet quality, policy, and compliance standards.

We follow strict data security protocols, compliance requirements, and client-specific governance standards to protect sensitive data throughout the engagement lifecycle.