Build Trustworthy AI Through Comprehensive Model Evaluation Services

Ensure your Gen AI models are accurate, fair, safe, and production-ready through expert human validation.

Human Intelligence Powering Responsible AI

Digital Divide Data (DDD) is a global data solutions partner helping organizations build, evaluate, and scale AI systems responsibly. We combine deep domain expertise, structured evaluation frameworks, and a highly trained global workforce to deliver reliable, unbiased, and high-quality AI outcomes at scale.

Data Types We Cover

Evaluate NLP, reasoning, summarization, generation, and conversational accuracy.

Assess visual understanding, classification, detection, captioning, and reasoning accuracy.

Test temporal understanding, action recognition, scene interpretation, and event consistency.

Assess speech recognition, transcription quality, sentiment, and audio understanding.

Our Model Evaluation Solutions

Accuracy Testing

Measure how correct the model’s outputs are compared to ground truth (e.g., correct answers, facts, logical reasoning).
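As a rough illustration of comparing outputs against ground truth, the sketch below computes exact-match accuracy in Python. It is a minimal example, not DDD's methodology: the function names and the normalization rule (lowercasing and collapsing whitespace) are assumptions chosen for the example, and real evaluations often need task-specific matching or human judgment.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences are not scored as errors.

    This normalization rule is an assumption for the example, not a standard.
    """
    return " ".join(text.lower().split())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Return the fraction of predictions that match their ground-truth reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("at least one example is required")
    matches = sum(
        normalize(pred) == normalize(ref)
        for pred, ref in zip(predictions, references)
    )
    return matches / len(references)


if __name__ == "__main__":
    # Hypothetical model outputs and ground-truth answers.
    model_outputs = ["Paris", " 4 ", "blue"]
    ground_truth = ["paris", "4", "red"]
    print(exact_match_accuracy(model_outputs, ground_truth))
```

Here two of the three outputs match after normalization, so the score is two thirds. Exact match suits short factual answers; longer or open-ended outputs usually call for rubric-based human scoring instead.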

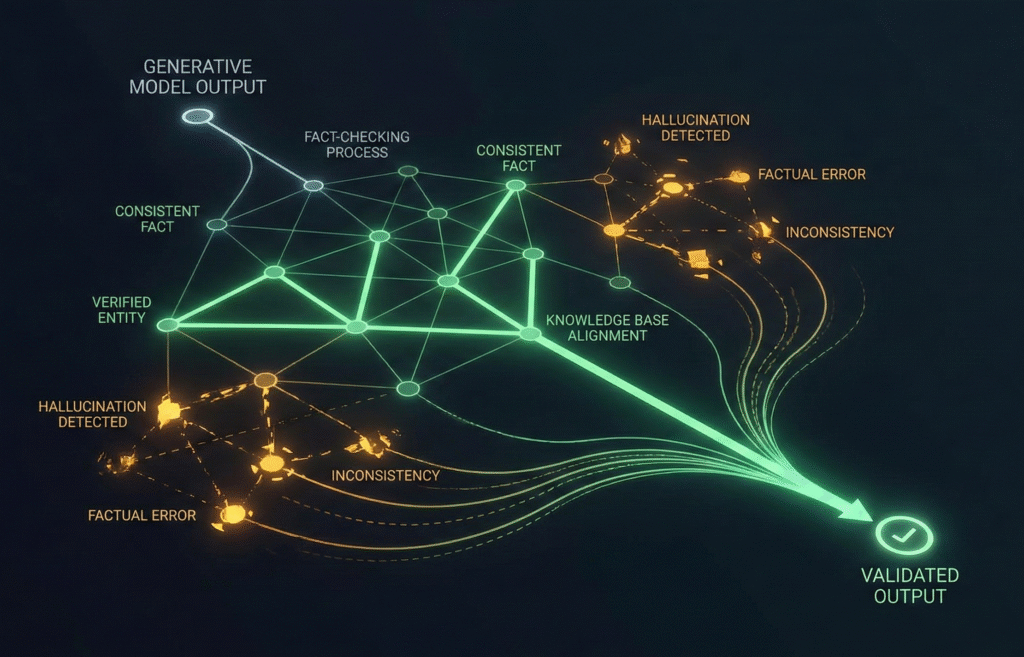

Factual Consistency Evaluation

Test whether the model generates factually accurate information and avoids hallucinations.

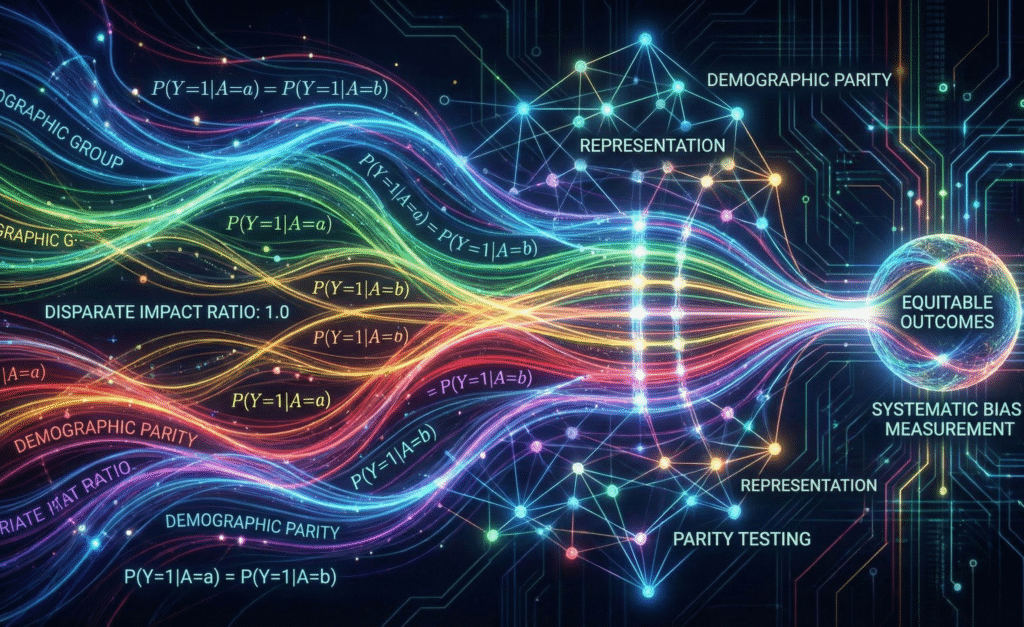

Bias and Fairness Assessment

Check whether the model’s outputs exhibit bias based on factors such as gender, race, culture, or geography.

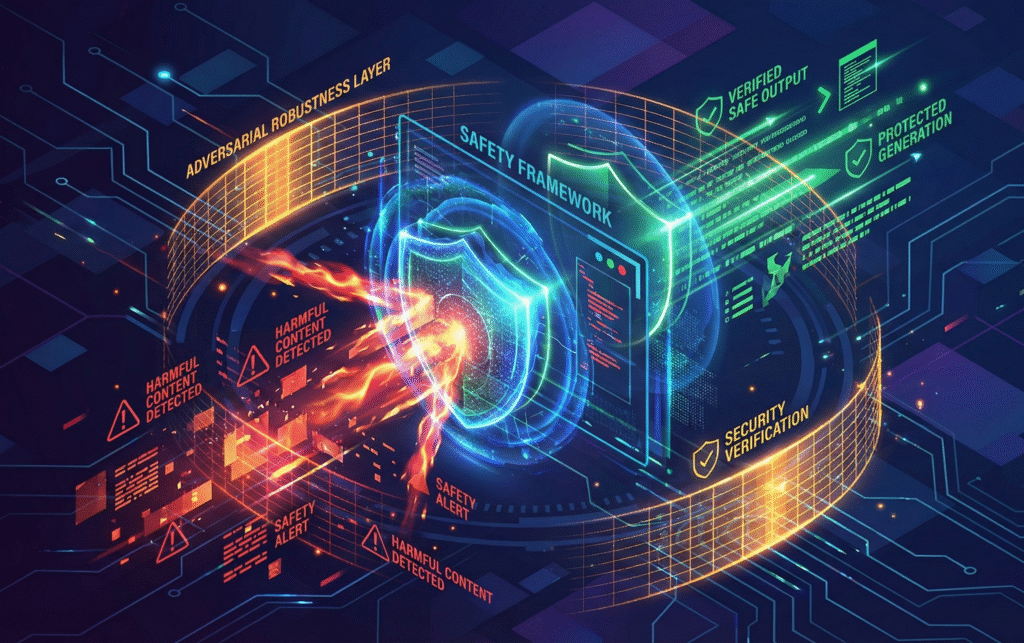

Toxicity and Safety Testing

Evaluate if the model produces harmful, offensive, or dangerous content.

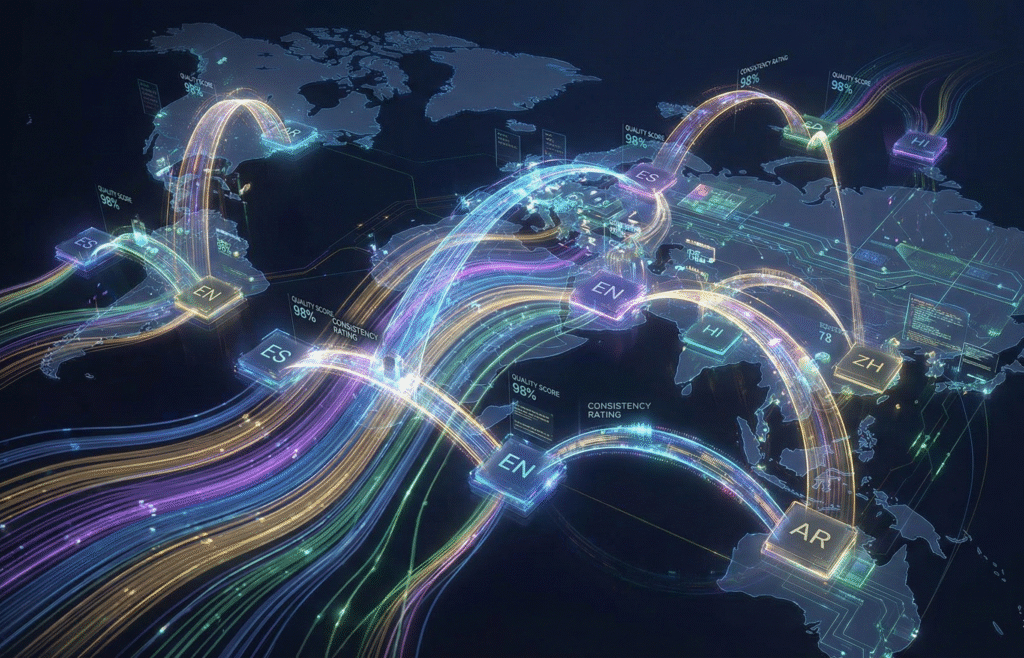

Multilingual and Localization Testing

Test model performance across different languages, dialects, and cultural contexts.

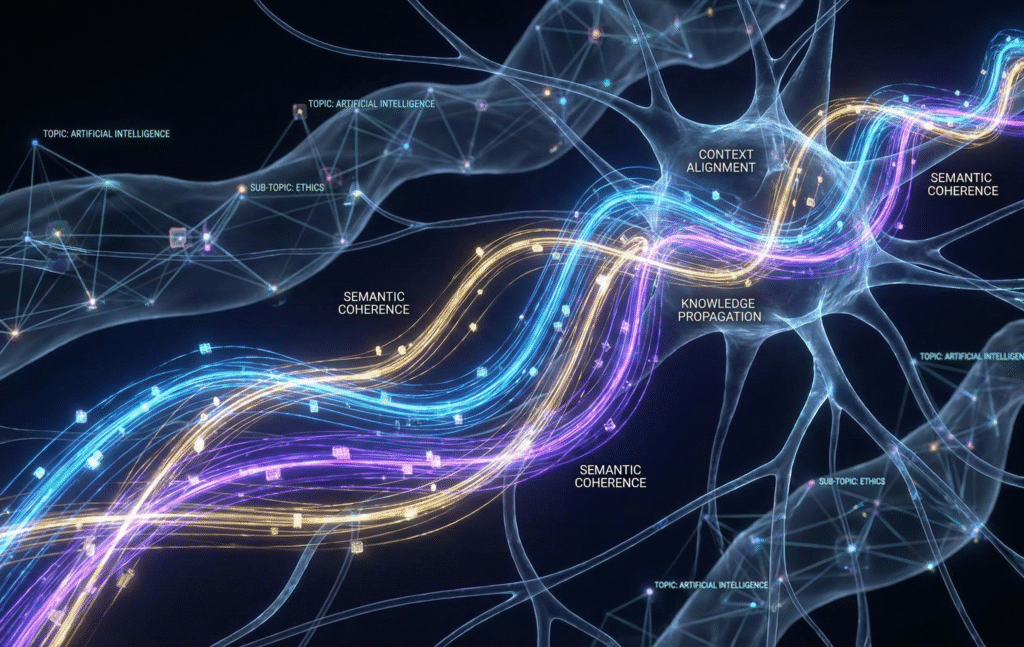

Response Relevance and Context Awareness

Evaluate whether the model’s answers stay on-topic, logical, and appropriate to the conversation or input.

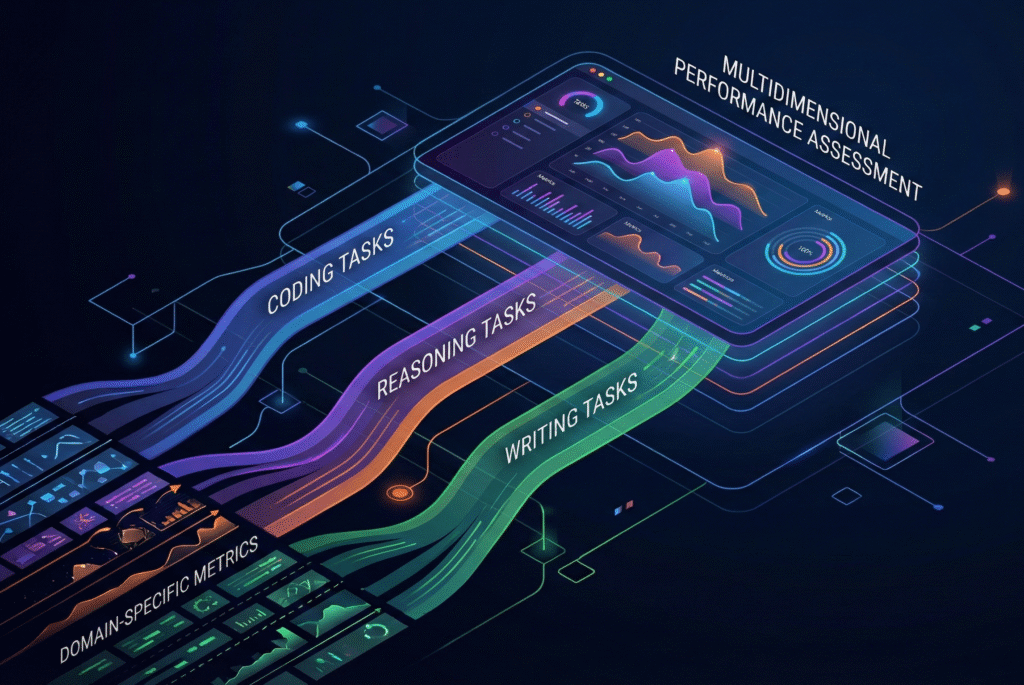

Task-Specific Evaluation

Measure model performance on specialized tasks such as code generation, summarization, translation, and image captioning.

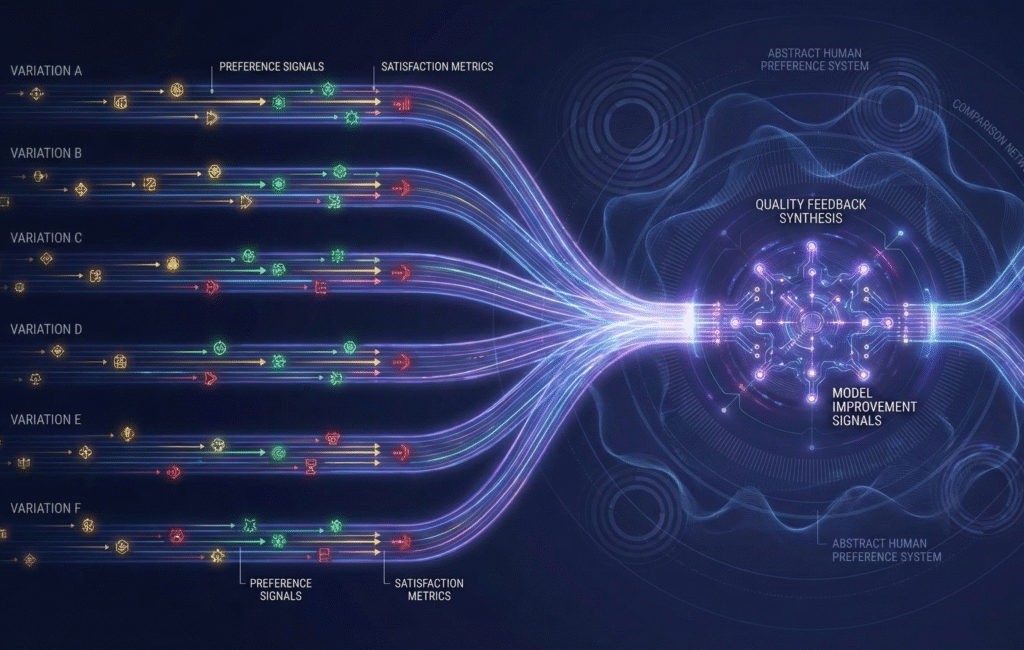

User Preference and Satisfaction Testing

Collect human feedback (e.g., ranking outputs) to see if users find the model’s responses helpful and high quality.
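One simple way to summarize ranked feedback is a pairwise win rate. The Python sketch below assumes each human judgment is recorded as a tuple `(model_a, model_b, winner)`, with `None` for a tie counted as half a win for each side. That record format, the tie rule, and the function name are assumptions for illustration, not a description of DDD's tooling.

```python
from collections import Counter
from typing import Optional

Judgment = tuple[str, str, Optional[str]]


def win_rates(judgments: list[Judgment]) -> dict[str, float]:
    """Compute each model's share of wins across pairwise human comparisons.

    A tie (winner is None) counts as half a win for both models.
    """
    wins: Counter = Counter()
    comparisons: Counter = Counter()
    for model_a, model_b, winner in judgments:
        if winner is not None and winner not in (model_a, model_b):
            raise ValueError(f"winner {winner!r} is not one of the compared models")
        comparisons[model_a] += 1
        comparisons[model_b] += 1
        if winner is None:
            wins[model_a] += 0.5
            wins[model_b] += 0.5
        else:
            wins[winner] += 1
    return {model: wins[model] / comparisons[model] for model in comparisons}


if __name__ == "__main__":
    # Hypothetical judgments from four evaluators comparing two model versions.
    feedback = [
        ("model_v1", "model_v2", "model_v1"),
        ("model_v1", "model_v2", "model_v2"),
        ("model_v1", "model_v2", "model_v1"),
        ("model_v1", "model_v2", None),
    ]
    print(win_rates(feedback))
```

With these four judgments, `model_v1` scores 0.625 and `model_v2` scores 0.375. A win rate is easy to read but hides how strongly evaluators preferred one response, so it works best alongside written rationales or rubric scores.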

Fully Managed, End-to-End Model Evaluation Workflow

From one-time evaluations to continuous, always-on pipelines, DDD manages the complete model evaluation lifecycle.

Define evaluation objectives, risk areas, benchmarks, and success criteria.

Develop test cases, prompts, edge scenarios, sampling strategies, and scoring rubrics.

Train domain-specific evaluators aligned with your model goals and guidelines.

Run evaluations across modalities with real-time tracking and progress visibility.

Validate results, analyze failure patterns, and surface actionable insights.

Deliver structured reports and integrate learnings into the next evaluation cycle.

Why Choose DDD?

We combine structured evaluation methodologies with trained human evaluators who understand nuances, context, and domain complexity, which is critical for assessing reasoning, bias, and alignment.

Our distributed workforce enables multilingual and localization testing across languages, dialects, and regions, ensuring your model performs reliably in global markets.

Whether you need a one-time audit or continuous evaluation pipelines, DDD scales with your model lifecycle, from pre-deployment validation to post-launch monitoring.

Platform-agnostic by design. We integrate with your tools, workflows, and infrastructure, never forcing proprietary systems.

What Our Clients Say

The structured evaluation reports gave our leadership confidence to deploy.

DDD’s model evaluation uncovered accuracy and bias issues we would have missed with automated testing alone. Their human-in-the-loop approach gave us the confidence to deploy our AI system in production.

DDD helped us benchmark performance across safety, factual consistency, and multilingual behavior at scale. Their insights directly influenced our model selection strategy.

DDD’s evaluators quickly learned our domain and continuously improved the quality of feedback across each evaluation cycle.

DDD’s Commitment to Security & Compliance

Your sensitive data is protected at every stage through rigorous global standards and secure operational infrastructure:

SOC 2 Type 2

ISO 27001

GDPR & HIPAA Compliance

TISAX Alignment

Blogs

Deep dive into the latest technologies and methodologies that are shaping the future of Gen AI.

Evaluating Gen AI Models for Accuracy, Safety, and Fairness

This blog explores a comprehensive framework for evaluating generative AI models by focusing on three critical dimensions: accuracy, safety,...

The Art of Data Annotation in Machine Learning

Data Annotation has become a cornerstone in the development of AI and ML models. In this blog, we will...

Responsible AI Starts with Rigorous Evaluation

Frequently Asked Questions

DDD evaluates both enterprise models (custom LLMs, copilots, decision systems) and foundation models across text, image, audio, video, sensor, and multimodal use cases.

Automated benchmarks miss nuance. DDD combines human expert evaluation with structured frameworks to assess reasoning quality, bias, safety, context awareness, and real-world behavior that automated metrics alone cannot capture.

Yes. We support pre-deployment validation, post-deployment audits, and continuous evaluation pipelines to monitor model performance as data, prompts, and use cases evolve.

Absolutely. DDD conducts evaluations across multiple languages, dialects, and cultural contexts, helping ensure your model performs consistently and appropriately in global markets.

We design targeted test cases and scenarios to identify bias related to gender, race, culture, geography, and socio-economic context, and provide actionable insights to reduce risk and improve alignment.

Our trained evaluators follow standardized guidelines, scoring rubrics, and quality checks, with multi-layer reviews to ensure consistency, reliability, and high-signal feedback.
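Consistency between evaluators can be quantified with an agreement statistic. The Python sketch below computes Cohen's kappa for two raters who labeled the same items. It is a generic illustration of one common measure, offered as an assumption about how such a check might look rather than a description of DDD's quality process. The labels and function name are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must label the same items")
    n = len(rater_a)
    if n == 0:
        raise ValueError("at least one labeled item is required")

    # Observed agreement: share of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Expected agreement if each rater labeled independently at their own label rates.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical label, so agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical safety labels from two evaluators on the same four responses.
    evaluator_1 = ["safe", "safe", "unsafe", "unsafe"]
    evaluator_2 = ["safe", "unsafe", "unsafe", "unsafe"]
    print(cohens_kappa(evaluator_1, evaluator_2))
```

In this example the raters agree on three of four items, chance agreement is 0.5, and kappa comes out to 0.5. Values near 1 indicate strong agreement, while values near 0 suggest the guidelines or rubric may need tightening.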